Docker

Getting Started With Docker

My colleagues and I all had the same feelings when we started working with Docker. In the vast majority of cases, this stemmed from a lack of understanding of the basic mechanisms, so Docker's behavior seemed unpredictable to us. By now the passions have subsided, and outbursts of hatred occur less and less often. Moreover, we are gradually coming to appreciate its merits in practice, and we are starting to like it … To lower the level of initial rejection and get more out of using it, you should take a look inside Docker's kitchen and look around carefully.

Let’s Get Going and Learn More About Docker

- Isolated launch of applications in containers.

- Simpler development, testing, and deployment of applications.

- No need to configure the environment for the application to run: it ships with the application, inside the container.

- Simpler application scaling and management with container orchestration systems.

Prehistory

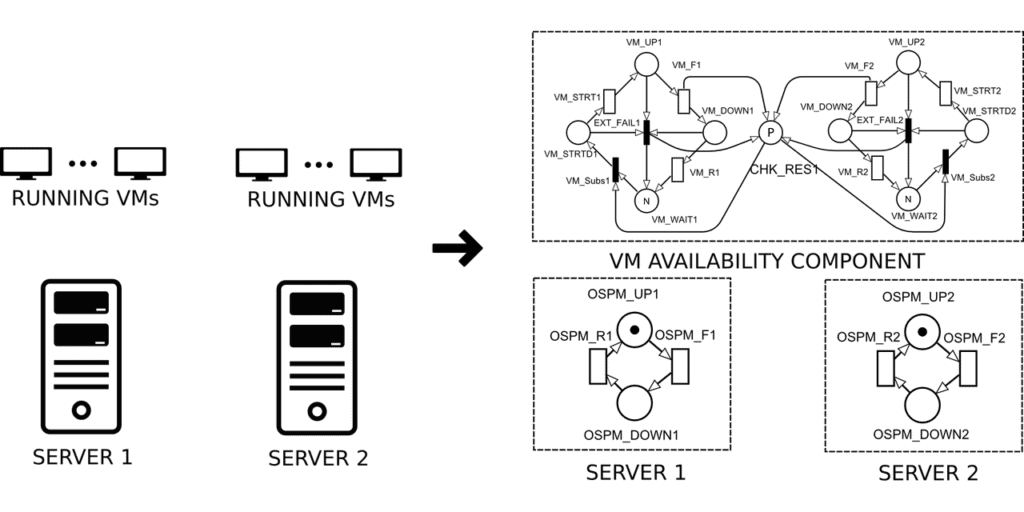

Virtual machines have long been used to isolate processes running on the same host and to run applications designed for different platforms. All virtual machines share the resources of one physical server:

- Processor

- Memory

- Disk space

- Network interfaces

Each VM runs its own operating system. You can install a different OS in each VM or run the same OS on all of them. We typically run the same OS on every VM, which makes it easier to run large production deployments of many containers across different VMs.

Containers Within Docker

Containers are not a new concept; they have been used on Linux-based systems for a long time. Docker has made it much easier to use them to run an application in an isolated area. In this case we are talking about OS-level virtualization. Unlike VMs, containers use their own isolated slice of the OS:

- File system

- Network interfaces

- Processes

An application running in a container thinks that it is the only one in the whole OS. Isolation is achieved through Linux mechanisms called namespaces and control groups. In simple terms, namespaces provide isolation within the OS, and control groups set limits on the container's consumption of host resources in order to balance the distribution of resources between running containers.

Containers themselves are not new. What the Docker project did was, first, hide the complex mechanisms of namespaces and control groups and, second, surround them with an ecosystem that makes containers convenient to use at all stages of software development.

Images

To a first approximation, an image can be thought of as a set of files. The image includes everything you need to start and run the application on a bare machine that has nothing on it but Docker: the OS, the runtime, and an application ready for deployment.

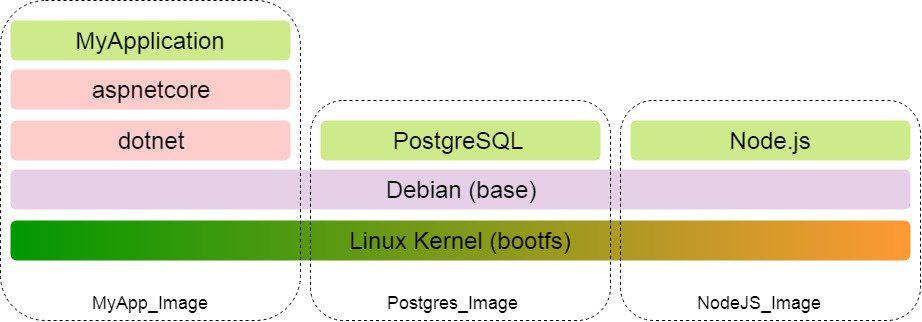

But this view raises a question. If we want to use several images on the same host, it would be wasteful, in terms of both download and storage, for every image to carry everything it needs to work, since most of the files will be the same and only the application being launched, and possibly the runtime, will differ. The structure of an image lets you avoid duplicating files.

An image consists of layers, each of which is an immutable file system or, put simply, a set of files and directories. The image as a whole is a union file system (Union File System), which can be thought of as the result of merging the file systems of the layers. The union file system can resolve conflicts, for example when files and directories with the same name are present in different layers. Each subsequent layer adds or removes files from the previous layers. In this context, "removes" can be read as "hides": the file in the underlying layer remains, but it is not visible in the union file system.
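The merging rule described above can be sketched in a few lines of Python. This is a toy model, not how Docker's storage drivers are implemented: layers are plain dictionaries, and the `WHITEOUT` marker, the `merge_layers` helper, and the file paths are all hypothetical names chosen for illustration.

```python
# Marker used in this sketch to mean "this layer hides the file below it".
WHITEOUT = object()


def merge_layers(layers):
    """Return the merged view of a stack of layers, lowest layer first."""
    view = {}
    for layer in layers:
        for path, content in layer.items():
            if content is WHITEOUT:
                view.pop(path, None)  # hide the file; the lower layer is untouched
            else:
                view[path] = content  # add the file or shadow a lower version
    return view


# Hypothetical layers: an OS layer, a runtime layer, and an application layer.
os_layer = {"/etc/os-release": "linux", "/tmp/cache": "stale"}
runtime_layer = {"/usr/bin/dotnet": "runtime", "/tmp/cache": WHITEOUT}
app_layer = {"/app/MyApplication.dll": "app", "/etc/os-release": "patched"}

image_view = merge_layers([os_layer, runtime_layer, app_layer])
print(sorted(image_view))
```

In the merged view `/tmp/cache` is gone and `/etc/os-release` shows the version from the top layer, while `os_layer` itself still holds both original entries.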

You can draw an analogy with Git: layers are like individual commits, and the image as a whole is the result of a squash operation. As we will see later, the parallels with Git do not end there. There are different implementations of a union file system.

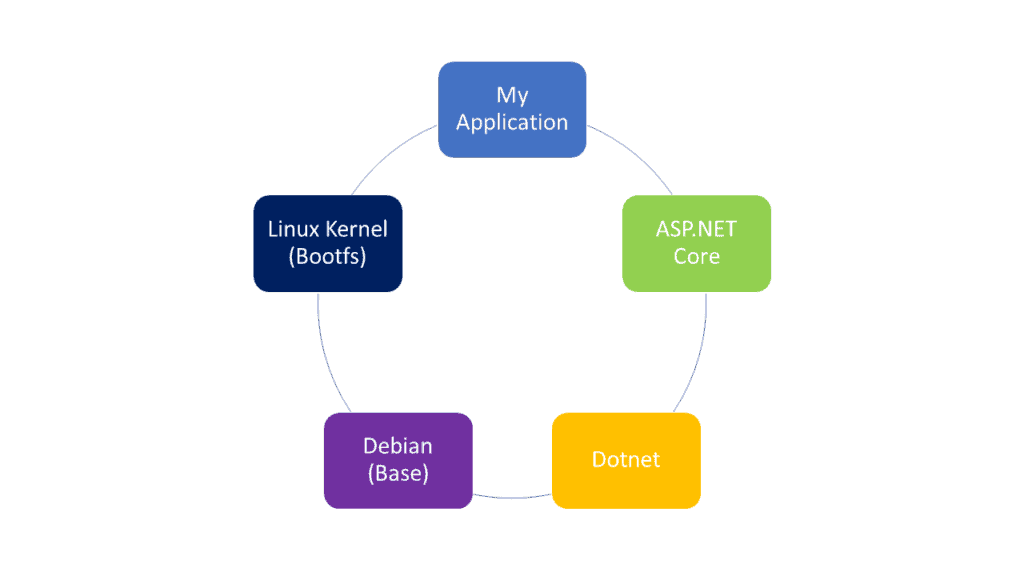

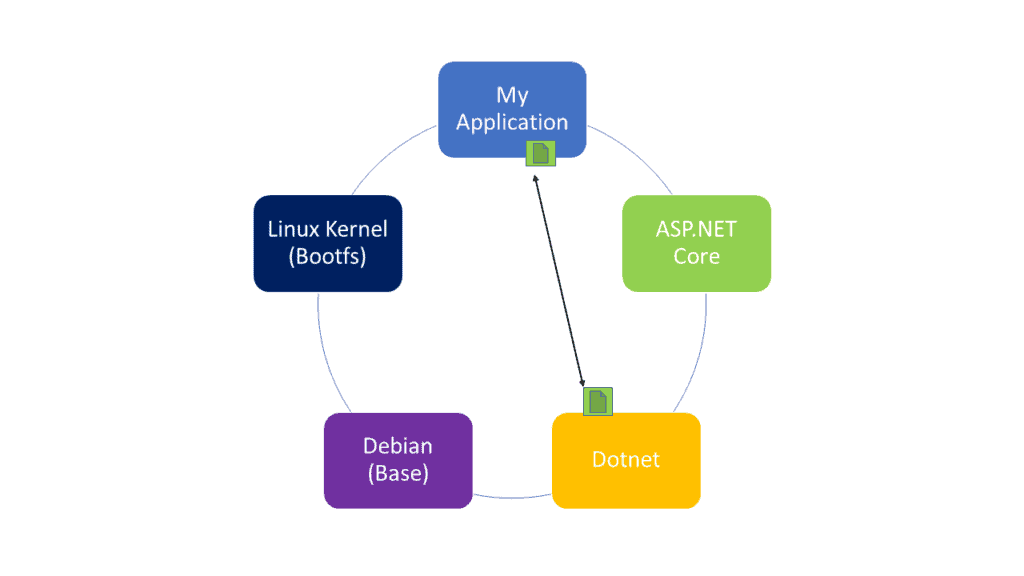

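For example, take the image of an arbitrary .NET application, MyApplication. In the diagram, the first layer is the Linux kernel, followed by the layers of the OS, the runtime, and the application itself. (Strictly speaking, containers share the host's kernel, so a real image carries only the user-space files of the OS.)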

Layers are always read-only. If you need to change a file that lives in a lower layer, you can do so in the MyApplication layer: the file is first copied to the desired layer and changed there, while the original layer keeps its original version.

The immutability of layers allows them to be shared by all the images on the host. For example, suppose MyApplication is a web application that uses a database and also works with a Node.js server.

Sharing also shows up when an image is downloaded. The manifest is loaded first; it describes which layers the image consists of. Then only those layers from the manifest that are not yet available locally are downloaded. So if we have already downloaded the kernel and OS layers for MyApplication, these layers will not be downloaded again for PostgreSQL and Node.js.
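The download logic can be sketched as follows. This is a simplified model under stated assumptions: the `pull` helper, the dictionary standing in for a registry, and the layer identifiers are hypothetical, and real manifests identify layers by content digests rather than by friendly names.

```python
def pull(image_name, registry, local_layers):
    """Download only the layers named in the manifest that are missing locally."""
    manifest = registry[image_name]  # step 1: load the manifest
    downloaded = []
    for layer_id in manifest:  # step 2: fetch missing layers only
        if layer_id not in local_layers:
            local_layers.add(layer_id)
            downloaded.append(layer_id)
    return downloaded


# Hypothetical registry: image name -> ordered list of layer identifiers.
registry = {
    "myapplication": ["base-os", "common-libs", "dotnet-runtime", "myapplication"],
    "postgresql": ["base-os", "common-libs", "postgresql"],
    "nodejs": ["base-os", "common-libs", "nodejs"],
}

local_layers = set()
first = pull("myapplication", registry, local_layers)
second = pull("postgresql", registry, local_layers)
third = pull("nodejs", registry, local_layers)
print(first, second, third)
```

After the first pull, the two shared layers are already local, so each of the later pulls transfers a single layer.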

Let's Summarize

- An image is a set of files sufficient to run an application on a bare machine with Docker installed on it.

- An image consists of immutable layers. Each layer can add, remove, or modify files from the previous layers.

- Because layers are immutable, multiple images can share them.

Docker Containers

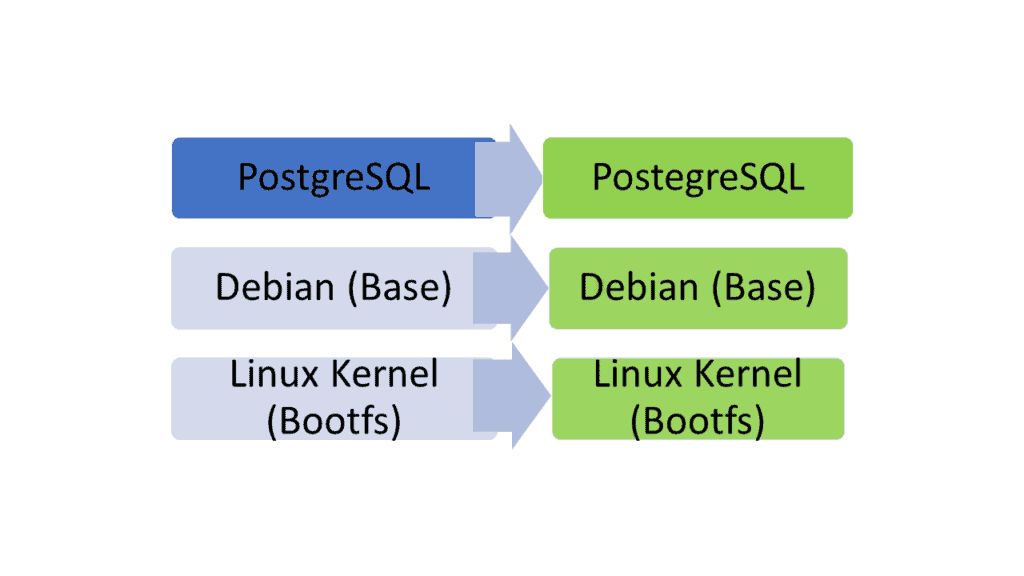

A Docker container is built on the basis of an image. The essence of turning an image into a container is adding a top layer that can be written to. The results of the application's work (files) are written to this layer.

docker create: image -> container

For example, we created a container based on the image with the PostgreSQL server and started it. When we create a database, the corresponding files appear in the top layer of the container, the writable layer.

You can also perform the reverse operation and create an image from a container. The top layer of the container differs from the rest only in that it is writable; otherwise it is an ordinary layer, a set of files and directories. By making the top layer read-only, we turn the container into an image.

docker commit: container -> image

Now I can move the image to another machine and run it there. The database created in the previous step will be visible on the PostgreSQL server. When changes are made while the container is running, the database file is copied from the immutable data layer to the writable layer and changed there.
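The round trip from image to container and back can be modeled in a short Python sketch. It is an illustration only: the `Container` class and the file paths are hypothetical, and the model ignores file deletion and everything else a real storage driver has to handle.

```python
class Container:
    """Toy model: read-only image layers plus one writable top layer."""

    def __init__(self, image_layers):
        self.image_layers = tuple(image_layers)  # shared, never modified
        self.writable = {}  # top layer, private to this container

    def read(self, path):
        # Look from the top layer downwards; the first match wins.
        for layer in (self.writable, *reversed(self.image_layers)):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, content):
        # Changes always land in the writable layer (copy-on-write);
        # the image layers below are left as they were.
        self.writable[path] = content

    def commit(self):
        # Freeze the top layer: the result is an image with one more layer.
        return self.image_layers + (dict(self.writable),)


# Hypothetical image with a single layer holding one data file.
postgres_image = ({"/data/template.db": "empty"},)

container = Container(postgres_image)  # plays the role of "docker create"
container.write("/data/mydb.db", "rows")  # a new database file
container.write("/data/template.db", "changed")  # a change to an existing file
new_image = container.commit()  # plays the role of "docker commit"

other_machine = Container(new_image)
print(other_machine.read("/data/mydb.db"))
```

The original image layer still contains the unchanged `/data/template.db`; only the committed top layer carries the new and modified files.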

Docker

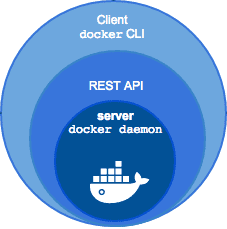

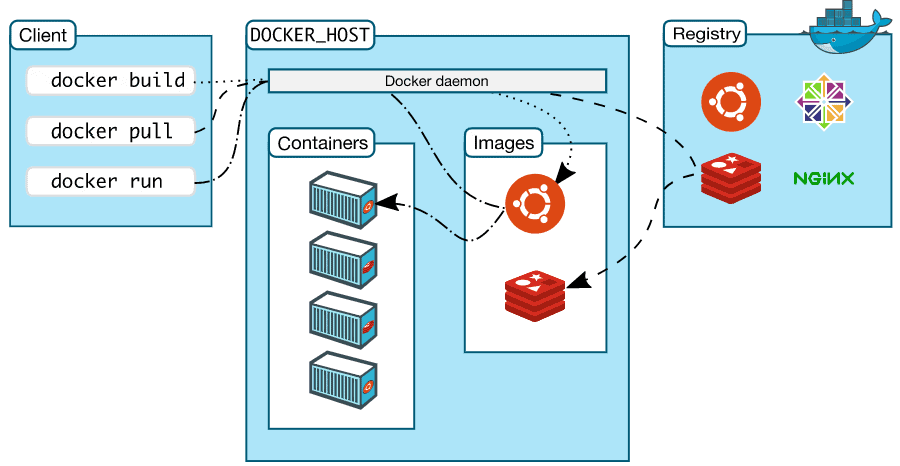

When you install Docker on a local machine, you get a command-line (CLI) client and an HTTP server that runs as a daemon. The server exposes a REST API, and the client simply converts the commands you enter into HTTP requests.
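To make the translation concrete, here is a rough sketch of how a few commands might map to requests. The `to_http_request` function is hypothetical, and the endpoint paths reflect my understanding of the Docker Engine API, so check them against the API reference before relying on them. A real client also adds an API version prefix and headers, and usually talks to the daemon over a Unix socket.

```python
from urllib.parse import urlencode


def to_http_request(argv):
    """Translate a few docker CLI commands into (method, path) pairs."""
    command, *args = argv
    if command == "version":
        return ("GET", "/version")
    if command == "ps":
        return ("GET", "/containers/json")
    if command == "images":
        return ("GET", "/images/json")
    if command == "pull":
        # The image name travels as a query parameter.
        return ("POST", "/images/create?" + urlencode({"fromImage": args[0]}))
    raise ValueError(f"command not covered by this sketch: {command}")


print(to_http_request(["ps"]))
print(to_http_request(["pull", "postgres"]))
```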

Registry

A registry is a repository of images. The best known is Docker Hub. If you are familiar with GitHub, the two are very similar, except that Docker Hub holds images rather than source code. Docker Hub also has repositories, public and private; you can download images (pull) and upload image changes (push). Once downloaded, images and the containers built from them are stored locally until they are deleted manually.

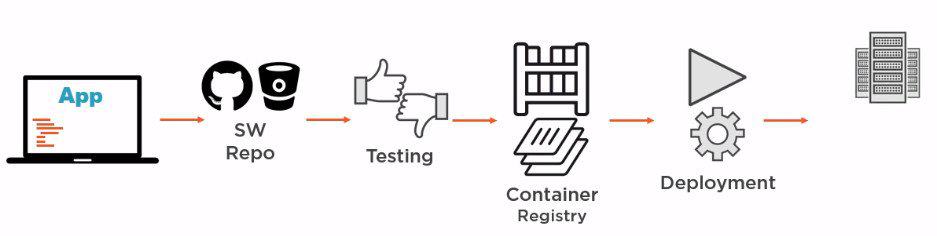

You can also set up your own image registry; then, when needed, Docker will look there for images that are not yet available locally. I should say that when you use Docker, the image registry becomes the most important link in CI/CD: the developer commits to the repository and the tests are run. If the tests succeed, then, based on the commit, the existing image is updated or a new image is built and subsequently deployed. And in the registry, only the necessary layers are updated, not whole images.

It is important not to limit your view of the image to a kind of box in which the application is merely delivered to its destination and then launched. An application can also be built inside an image (it is more accurate to say in a container, but more on that later). In the diagram above, the server that builds the images needs only Docker installed, not the various environments, platforms, and tools required to build the different components of our application.

Dockerfile

A Dockerfile is a set of instructions on the basis of which a new image is built. Each instruction adds a new layer to the image. As an example, take the Dockerfile from which the image of the .NET application MyApplication discussed earlier could be built:

FROM microsoft/aspnetcore

WORKDIR /app

COPY bin/Debug/publish .

ENTRYPOINT ["dotnet", "MyApplication.dll"]

Let's consider each instruction separately:

- FROM: Specify the base image, on the basis of which we will build our own. In this case, we take microsoft/aspnetcore, the official image from Microsoft, which can be found on Docker Hub.

- WORKDIR: Set the working directory inside the image.

- COPY: Copy the pre-published MyApplication application into the working directory inside the image. The first argument is the source directory, a path relative to the context specified in the docker build command; the second argument is the target directory in the image, and in this case the dot means the working directory.

- ENTRYPOINT: Configure the container as an executable. To start the container, the command dotnet MyApplication.dll will be executed.

If we run the docker build command in the directory with the Dockerfile, we will get an image based on microsoft/aspnetcore, to which three more layers will be added.

Consider another Dockerfile, which demonstrates an excellent Docker feature for producing lightweight images. A similar file is generated by Visual Studio 2017 for a project with container support, and it lets you build an image from the application source code.

FROM microsoft/aspnetcore-build:2.0 AS publish

WORKDIR /src

COPY . .

RUN dotnet restore

RUN dotnet publish -o /publish

FROM microsoft/aspnetcore:2.0

WORKDIR /app

COPY --from=publish /publish .

ENTRYPOINT ["dotnet", "MyApplication.dll"]

The Instructions Are Divided Into Two Sections

- Definition of the image used to build the application: microsoft/aspnetcore-build. This image is designed to build, publish, and run .NET applications and, according to Docker Hub, with the 2.0 tag it has a size of 699 MB. Next, the application's source files are copied into the image, and the dotnet restore and dotnet publish commands are run inside it, with the result placed in the /publish directory within the image.

- Definition of the base image, in this case microsoft/aspnetcore, which contains only the runtime and, according to Docker Hub, with the 2.0 tag has a size of only 141 MB. Next, the working directory is set and the result of the previous stage is copied into it (the stage name is given in the --from argument), the command to start the container is defined, and that's it: the image is ready.

As a result, starting from the application's source code, the application was built on the basis of a heavy image with the SDK, and then the result was placed on top of a lightweight image containing only the runtime!

In conclusion, I want to note that for the sake of simplicity I deliberately spoke in terms of an image when discussing work with the Dockerfile. In fact, the changes made by each instruction occur, of course, not in the image (after all, it consists only of immutable layers) but in a container. The mechanism is this: a container is created from the base image (a writable layer is added to it), the instruction is executed in this layer (it may add files to the writable layer, as COPY does, or not, as with ENTRYPOINT), the docker commit command is called, and an image is obtained. The process of creating a container and committing it to an image is repeated for each instruction in the file. As a result, in the process of forming the final image, as many intermediate images and containers are created as there are instructions in the file. All of them are removed automatically once the final image has been built.
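The create, run, commit cycle described above can be sketched as a loop. This is a toy model of the mechanism as the article describes it, not Docker's actual builder: the `build` function is hypothetical, each instruction is reduced to the files it writes, and instructions that change only metadata are represented by an empty set of files.

```python
def build(base_image, instructions):
    """Toy model of the build loop: create a container, run, commit, repeat."""
    image = tuple(base_image)
    intermediates = []
    for name, files in instructions:
        writable = {}  # "docker create": add a writable layer on top
        writable.update(files)  # run the instruction inside the container
        image = image + (writable,)  # "docker commit": freeze the layer
        intermediates.append(image)
    # Everything before the last commit is an intermediate image.
    return image, intermediates[:-1]


# Hypothetical stand-in for the microsoft/aspnetcore base image.
base = ({"/usr/bin/dotnet": "runtime"},)

# The three instructions that follow FROM in the first Dockerfile.
instructions = [
    ("WORKDIR /app", {}),  # metadata only, no files written
    ("COPY bin/Debug/publish .", {"/app/MyApplication.dll": "app"}),
    ("ENTRYPOINT", {}),  # metadata only, no files written
]

final_image, intermediate_images = build(base, instructions)
print(len(final_image), len(intermediate_images))
```

The final image ends up with three layers on top of the base, matching the count given for the first Dockerfile.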

Wrapping it Up

Of course, Docker is not a panacea, and its use should be justified and motivated by more than the desire to use a fashionable technology that many people talk about. At the same time, I am sure that Docker, used competently and appropriately, can bring many benefits at all stages of software development and make life easier for everyone involved in the process.

I hope I was able to explain the basics and spark interest in exploring the topic further. Of course, this article alone is not enough to master Docker, but I hope it will become one piece of the puzzle in understanding the overall picture of what is happening in the world of containers run by Docker.
